I went to an all-boys high school, Christian Brothers Academy, where the closest thing we had to a security system was hallway monitors yelling at you to tuck in your shirt.

The only serious threat we faced was nuclear annihilation, which could somehow be survived by hiding under a desk.

But for decades now, school safety in many U.S. schools has meant metal detectors at the front doors, cameras in the hallways and sometimes even officers patrolling the halls.

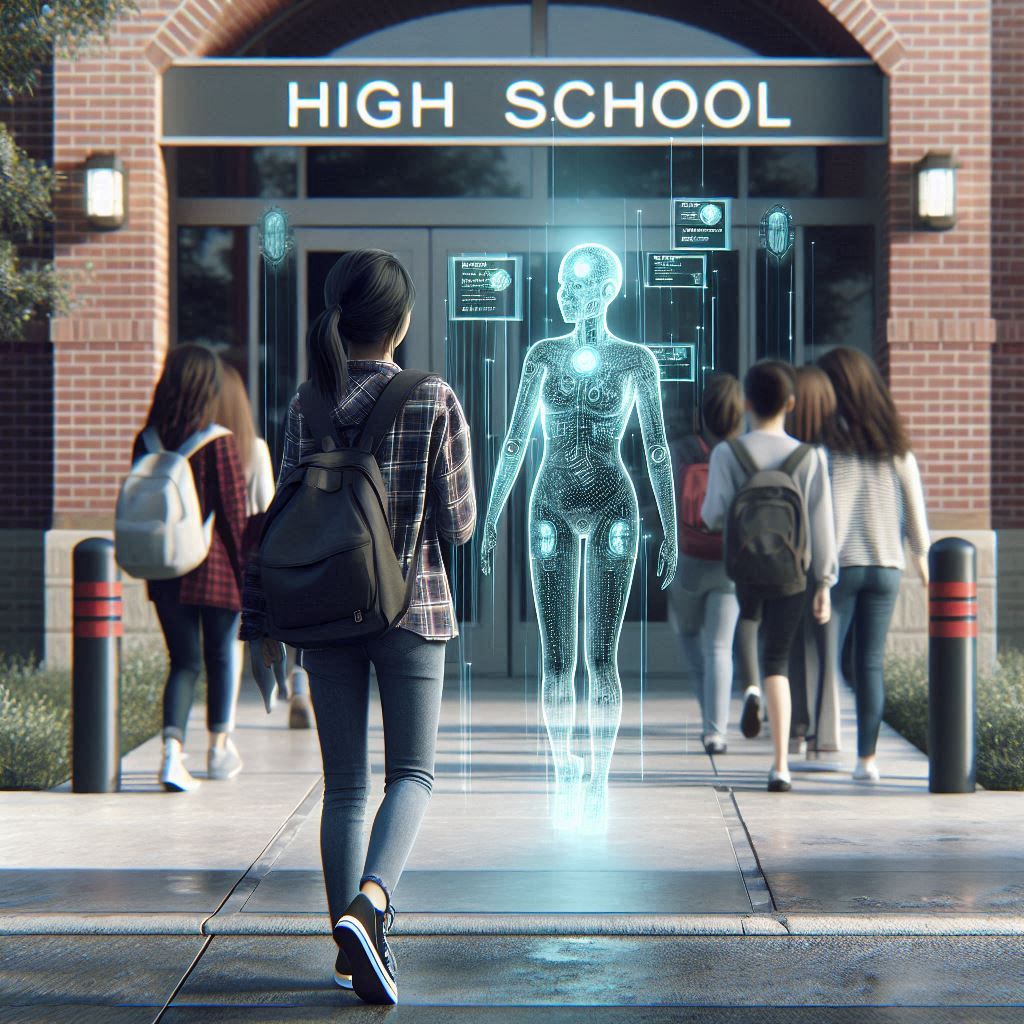

And recently, a whole new layer of security has been added.

Across the country, districts are deploying artificial intelligence to monitor student chats, scan social media, detect weapons and flag potential threats before an incident can occur.

Proponents say these tools can identify threats faster than any human, buying precious seconds in an emergency.

But critics warn that these same systems can be alarmist, intrusive and, when the AI makes a bad call, deeply damaging for the students who are wrongly implicated.

And there's mounting evidence that both sides could be right…

Digital Surveillance Goes Live

In recent years, thousands of U.S. schools have licensed AI-powered monitoring platforms like Gaggle and Lightspeed Alert.

These cloud-based services integrate directly with school-issued email, documents and chat apps, essentially functioning as an automated hall monitor for the digital world.

They work by constantly scanning student messages and files for keywords and phrases linked to violence, self-harm, bullying or other safety concerns.

When something triggers the system, an alert is sent to school staff so they can decide whether to intervene.
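To make that mechanism concrete, here is a minimal Python sketch of keyword-based flagging. The watchlist, its categories and the `scan` function are hypothetical: Gaggle and Lightspeed Alert don't publish their term lists or models, and their real systems are likely more sophisticated than plain pattern matching. What the sketch does show is why context-free matching misfires: it has no way to tell a fictional art project from a real threat.

```python
import re
from dataclasses import dataclass

# HYPOTHETICAL watchlist for illustration only; real vendors' term lists are proprietary.
WATCHLIST = {
    "violence": ["gun", "shoot", "weapon"],
    "self-harm": ["hurt myself", "kill myself"],
    "bullying": ["nobody likes you"],
}


@dataclass
class Alert:
    document_id: str
    category: str
    matched_term: str


def scan(document_id: str, text: str) -> list[Alert]:
    """Return one alert per watchlist term found in the text (case-insensitive)."""
    alerts = []
    for category, terms in WATCHLIST.items():
        for term in terms:
            # \b word boundaries keep "gun" from matching inside "begun"
            if re.search(rf"\b{re.escape(term)}\b", text, re.IGNORECASE):
                alerts.append(Alert(document_id, category, term))
    return alerts


if __name__ == "__main__":
    # A harmless description of artwork still trips the "violence" category.
    for alert in scan("art-project-42", "My art project shows a knight holding a weapon."):
        print(alert)
```

In a deployment like the ones described here, each `Alert` would go to a staff member for review rather than triggering action on its own; the matcher only decides what a human gets asked to look at.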

You can clearly see the promise of these AI tools. Early intervention can save lives.

But the reality of their effectiveness is far more complicated.

For example, a 13-year-old in Tennessee was arrested after Gaggle flagged a joke about a school shooting that the student made in a private chat.

That message set off a chain of events that included an interrogation and a strip search.

And it led to the student being placed under house arrest.

Local authorities said they acted "out of caution." But privacy advocates called it a textbook case of overreach.

In Lawrence, Kansas, administrators reviewed more than 1,200 Gaggle alerts over a 10-month span.

It turns out that nearly two-thirds of those incidents were false alarms, flagged for things like writing about "mental health" in a college essay, or because an art project referenced a weapon in a fictional context.

Because of incidents like these, the companies behind these AI tools say they've refined their algorithms to reduce unnecessary flags. Some terms, like LGBTQ references, have been removed after complaints of bias.

But civil liberties groups argue that the underlying problem is still there.

The fact is, normal teenage behavior can often be interpreted as dangerous.

And now that every keystroke can be monitored, there's a far greater chance that ordinary mistakes any kid might make could be treated as threats.

But for many schools, it's a risk worth taking. And digital surveillance is just one layer of AI-powered school security.

At East Alton-Wood River High School in Illinois, an Evolv Express AI-powered weapons detection system was installed to scan students as they entered the building.

Over the course of roughly 17,678 entries, the system generated 3,248 alerts. Yet only three of them turned out to be dangerous contraband.

That means more than 99% of the alerts were false alarms.
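The arithmetic behind that figure is worth spelling out, because the 99% number is the share of *alerts* that were wrong (what statisticians call the false discovery rate), not the textbook false-positive rate, which divides false alarms by all entries that carried no contraband. The short Python sketch below uses only the reported figures; the textbook rate rests on a loudly stated assumption that the three items caught were the only contraband, one per entry.

```python
# Figures reported for the Evolv Express deployment at East Alton-Wood River High School.
entries = 17_678
alerts = 3_248
true_hits = 3  # alerts that turned out to be dangerous contraband

false_alarms = alerts - true_hits      # alerts that were wrong
share_false = false_alarms / alerts    # share of alerts that were false alarms
alert_rate = alerts / entries          # how often walking in set the system off

# Textbook false-positive rate = FP / (FP + TN).
# ASSUMPTION: the 3 caught items were the only contraband, one per entry,
# so every other entry counts as a true negative.
negatives = entries - true_hits
false_positive_rate = false_alarms / negatives

print(f"{false_alarms} false alarms")
print(f"{share_false:.1%} of alerts were false alarms")
print(f"{alert_rate:.1%} of entries triggered an alert")
print(f"{false_positive_rate:.1%} textbook false-positive rate (under the assumption above)")
```

Run as written, it reports 3,245 false alarms, about 99.9% of alerts being false, and roughly 18.4% of entries triggering an alert. In other words, nearly one student in five set off the system on the way in, and almost none of those alerts were real.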

But district officials say the system is worth using because it forces students to think twice before bringing anything questionable into the school.

ZeroEyes is an AI platform that scans live security camera footage for firearms. When it thinks it sees one, an alert is sent to a human reviewer before being forwarded to police.

The company insists that keeping a human reviewer in the loop limits false alarms.

Yet a recent StateScoop investigation found that its alerts have triggered lockdowns over harmless objects, including a student walking in with an umbrella.

Despite these false alarms, ZeroEyes has been deployed in schools across 43 states.

One district to watch is Loudoun County, Virginia, which began rolling out an AI platform called VOLT this summer.

Rather than trying to identify individual students, VOLT's algorithms are trained to spot suspicious movements, like the motion of someone drawing a firearm.

Any alerts are then passed to school security staff, who review the footage before deciding whether to act.

Officials argue this reduces privacy concerns and helps cut down on false positives. That sounds like a win-win.

But no matter how advanced the technology, these AI systems aren't infallible.

Last year in Nashville, an Omnilert system failed to detect a real shooter's weapon at Antioch High School.

Horrifically, a student was killed. It's a tragic reminder that when AI gets it wrong, the consequences can be devastating.

Here's My Take

To me, the main question isn't whether AI can help keep schools safer…

It's how much risk society is willing to take on in exchange for that safety.

Because there's a privacy trade-off with all of these AI-powered security platforms.

I understand that false positives can traumatize students. But false negatives can cost lives.

So I believe AI-enhanced security is the logical next step.

But school districts can't afford to "set and forget" these systems. They need to be paired with clear policies and constant evaluation of what's working and what's not.

I'm confident that the technology will improve. And within the next five years, AI surveillance will be as common in American schools as pizza in the cafeteria.

The challenge is making sure that adoption doesn't come at the cost of trust.

Because whether it's a large public high school or my own small alma mater, the goal should be the same…

A school that feels like a place to learn, not a place to be policed.

Regards,

Ian King

Chief Strategist, Banyan Hill Publishing

Editor's Note: We'd love to hear from you!

If you want to share your thoughts or suggestions about the Daily Disruptor, or if there are any specific topics you'd like us to cover, just send an email to [email protected].

Don't worry, we won't reveal your full name if we publish a response. So feel free to comment away!