Both Nvidia (NASDAQ: NVDA) and Micron Technology (NASDAQ: MU) have been highly profitable investments in the past year, with their share prices rising rapidly thanks to the way artificial intelligence (AI) has supercharged their businesses.

While Nvidia stock has gained 255% in the past year, Micron’s gains stand at 91%. If you are looking to add one of these two AI stocks to your portfolio right now, which one should you be buying?

The case for Nvidia

Nvidia has been the go-to supplier of graphics processing units (GPUs) for major cloud computing companies looking to train AI models. The demand for Nvidia’s AI GPUs was so strong last year that its customers were waiting for as long as 11 months to get their hands on the company’s hardware, according to investment bank UBS.

Nvidia has since ramped up production to meet this heightened demand, cutting the wait time to three to four months. That helps explain why the company’s growth has been so stunning in recent quarters.

In the fourth quarter of fiscal 2024 (the three months ended Jan. 28), Nvidia’s revenue shot up a whopping 265% year over year to $22.1 billion. Meanwhile, adjusted earnings (non-GAAP, meaning they depart from generally accepted accounting principles) jumped 486% to $5.16 per share.

That eye-popping growth is set to continue in the current quarter. Nvidia’s revenue guidance of $24 billion for the first quarter of fiscal 2025 would translate into a year-over-year gain of 233%.

Analysts are expecting the company’s earnings to jump to $5.51 per share in the current quarter, roughly five times the year-ago period’s reading of $1.09 per share. Even better, Nvidia should be able to sustain its impressive momentum for the rest of the fiscal year, especially since it expects demand for its upcoming AI GPUs based on the Blackwell architecture to exceed supply.
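The growth figures above can be sanity-checked by backing out the year-ago values they imply. The Python sketch below uses only numbers quoted in this article; the helper `implied_prior` is a hypothetical name, and the outputs are rounded back-of-the-envelope estimates, not reported results.

```python
def implied_prior(current: float, growth_pct: float) -> float:
    """Return the year-ago value implied by a current value and its % year-over-year growth."""
    return current / (1 + growth_pct / 100)


# Figures quoted in the article (revenue in $ billions, EPS in dollars per share)
fy23_q4_revenue = implied_prior(22.1, 265)  # Q4 fiscal 2024 revenue: $22.1B, up 265%
fy23_q4_eps = implied_prior(5.16, 486)      # Q4 fiscal 2024 non-GAAP EPS: $5.16, up 486%
fy24_q1_revenue = implied_prior(24.0, 233)  # Q1 fiscal 2025 guidance: $24B, up 233%

# Analysts' Q1 fiscal 2025 EPS estimate compared with the year-ago $1.09
eps_multiple = 5.51 / 1.09

print(f"Implied Q4 fiscal 2023 revenue: ${fy23_q4_revenue:.2f} billion")
print(f"Implied Q4 fiscal 2023 EPS: ${fy23_q4_eps:.2f}")
print(f"Implied Q1 fiscal 2024 revenue: ${fy24_q1_revenue:.2f} billion")
print(f"Q1 fiscal 2025 EPS estimate vs. year-ago: {eps_multiple:.2f}x")
```

The EPS ratio comes out just above 5, which corresponds to growth of roughly 400% over the year-ago quarter.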

Nvidia management pointed out on its February earnings conference call that, although the supply of its current-generation chips is improving, its next-generation products will be “supply constrained.” That isn’t surprising, as Nvidia’s upcoming Blackwell GPUs, which are set to start shipping later in 2024, are reportedly four times faster at training AI models than the current H100 processor.

Nvidia also expects its Blackwell AI GPUs to deliver a 30-fold increase in AI inference performance. This should help it cater to a fast-growing segment of the AI chip market: the market for AI inference chips is expected to grow from $16 billion in 2023 to $91 billion in 2030, according to Verified Market Research.

Encouragingly, customers are already lining up for Nvidia’s Blackwell processors, with Meta Platforms planning to use the latest chips to train future generations of its Llama large language model (LLM).

All this explains why analysts are forecasting Nvidia’s earnings to increase from $12.96 per share in fiscal 2024 to almost $37 per share in fiscal 2027. That translates into a compound annual growth rate (CAGR) of almost 42%, suggesting that Nvidia could remain a top AI growth play going forward.
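The CAGR follows from the standard formula (end / start)^(1 / years) − 1. A minimal Python sketch, assuming a fiscal 2027 figure of exactly $37 (the forecast is “almost $37”) and three years of compounding from fiscal 2024 to fiscal 2027:

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate, returned as a fraction (0.42 means 42%)."""
    return (end / start) ** (1 / years) - 1


# Fiscal 2024 EPS of $12.96 -> assumed fiscal 2027 estimate of $37 (three years)
rate = cagr(12.96, 37.0, 3)
print(f"Implied EPS CAGR: {rate:.1%}")
```

With these inputs the result lands just under 42%, consistent with the figure cited above; a slightly lower fiscal 2027 estimate would pull it down a little.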

The case for Micron Technology

The booming demand for Nvidia’s AI chips is turning out to be a solid tailwind for Micron Technology. That’s because Nvidia’s AI GPUs are powered by high-bandwidth memory (HBM) from the likes of Micron.

On its recent earnings conference call, Micron pointed out that Nvidia will use Micron’s HBM chips in its H200 processors, which are set to become available to customers beginning in the second calendar quarter of 2024. Micron management also added that it is “making progress on additional platform qualifications with multiple customers.”

The demand for Micron’s HBM chips is so strong that it has sold out its entire capacity for 2024. What’s more, the memory specialist says that “the overwhelming majority of our 2025 supply has already been allocated,” indicating that AI will continue to drive robust demand for Micron’s chips.

Micron is also looking to push the envelope in the HBM market. The company has started sampling a new HBM chip with 50% more memory capacity, which will allow the likes of Nvidia to build more powerful AI chips. Booming HBM demand should therefore boost Micron’s financials, with the company estimating that it will “generate several hundred million dollars of revenue from HBM in fiscal 2024.”

The HBM market is expected to generate almost $17 billion in revenue this year (20% of overall DRAM industry revenue), up from $4.4 billion in 2023. Even better, it could keep growing over the long run on the back of the fast-growing AI chip market. As a result, Micron should be able to sustain the impressive growth it has started to deliver.
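Two back-of-the-envelope derivations follow from these HBM figures: how much the market would grow in one year, and the overall DRAM industry revenue implied by a 20% share. A rough Python sketch, assuming “almost $17 billion” can be approximated as $17 billion; neither output is a reported figure.

```python
hbm_2023 = 4.4    # HBM revenue in 2023, $ billions
hbm_2024 = 17.0   # "almost $17 billion" expected this year (approximated as 17)
hbm_share = 0.20  # HBM's expected share of overall DRAM industry revenue

growth_multiple = hbm_2024 / hbm_2023      # year-over-year revenue multiple
implied_dram_total = hbm_2024 / hbm_share  # total DRAM revenue implied by the 20% share

print(f"HBM revenue multiple: {growth_multiple:.1f}x")
print(f"Implied DRAM industry revenue: ${implied_dram_total:.0f} billion")
```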

The company’s revenue in the recently reported second quarter of fiscal 2024 (which ended on Feb. 29) rose 57% year over year to $5.82 billion. Its revenue guidance of $6.6 billion for the current quarter would represent an even bigger jump of 76% from the year-ago period. So, Micron’s AI-driven growth appears to be just getting started.
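The year-ago revenue implied by each growth rate, and the quarter-over-quarter step-up built into the guidance, can be worked out directly from these figures. A rough Python sketch; the outputs are approximations derived from rounded numbers, not Micron’s reported results.

```python
q2_revenue = 5.82  # fiscal Q2 2024 revenue, $ billions (up 57% year over year)
q3_guidance = 6.6  # revenue guidance for the current (fiscal Q3) quarter, $ billions (up 76%)

# Year-ago revenue implied by each growth rate: current / (1 + growth)
q2_year_ago = q2_revenue / 1.57
q3_year_ago = q3_guidance / 1.76

# Sequential growth implied by the guidance versus the just-reported quarter
sequential_growth = q3_guidance / q2_revenue - 1

print(f"Implied year-ago Q2 revenue: ${q2_year_ago:.2f} billion")
print(f"Implied year-ago Q3 revenue: ${q3_year_ago:.2f} billion")
print(f"Guided quarter-over-quarter growth: {sequential_growth:.1%}")
```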

The verdict

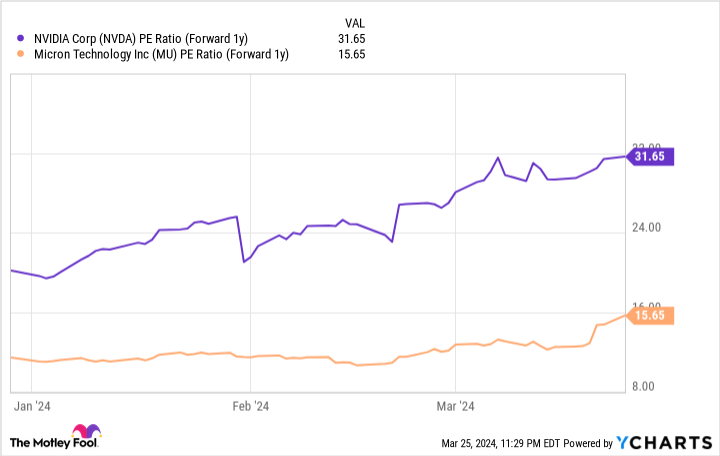

Given the data above, Micron may turn out to be the better AI play for one simple reason: at almost 37 times sales, Nvidia stock is far more expensive than Micron, which trades at 6.4 times sales. Micron is also significantly cheaper than Nvidia on a forward earnings multiple basis.
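The price-to-sales ratio is market capitalization divided by revenue (usually trailing 12-month revenue), so each multiple reads as the dollars investors pay for every $1 of a company’s annual sales. A minimal Python sketch comparing the two multiples quoted above, treating “almost 37 times” as 37:

```python
nvda_ps = 37.0  # Nvidia's price-to-sales ratio ("almost 37 times", approximated as 37)
mu_ps = 6.4     # Micron's price-to-sales ratio

# How many times richer Nvidia's sales multiple is than Micron's
relative_premium = nvda_ps / mu_ps

print(f"Nvidia: ${nvda_ps:.2f} of market value per $1 of sales")
print(f"Micron: ${mu_ps:.2f} of market value per $1 of sales")
print(f"Nvidia's multiple is about {relative_premium:.1f}x Micron's")
```

By this measure, investors are paying nearly six times as much for each dollar of Nvidia’s sales as for each dollar of Micron’s.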

Of course, Nvidia is growing at a much faster pace than Micron, and it could justify its expensive valuation by sustaining its terrific growth. However, investors looking for a cheaper way to play the AI boom may want to consider Micron, given its attractive valuation and the rapid growth it offers right now.

Should you invest $1,000 in Nvidia right now?

Before you buy stock in Nvidia, consider this:

The Motley Fool Stock Advisor analyst team just identified what they believe are the 10 best stocks for investors to buy now… and Nvidia wasn’t one of them. The 10 stocks that made the cut could produce monster returns in the coming years.

Stock Advisor provides investors with an easy-to-follow blueprint for success, including guidance on building a portfolio, regular updates from analysts, and two new stock picks each month. The Stock Advisor service has more than tripled the return of the S&P 500 since 2002*.


*Stock Advisor returns as of March 25, 2024

Randi Zuckerberg, a former director of market development and spokeswoman for Facebook and sister to Meta Platforms CEO Mark Zuckerberg, is a member of The Motley Fool’s board of directors. Harsh Chauhan has no position in any of the stocks mentioned. The Motley Fool has positions in and recommends Meta Platforms and Nvidia. The Motley Fool has a disclosure policy.

Better Artificial Intelligence (AI) Stock: Nvidia vs. Micron Technology was originally published by The Motley Fool